Robotics Engineering Practicum: Leveraging 3D Semantic Instance Segmentation for Robot Manipulation Tasks

Background

The pick-and-place task is one of the most common manipulation tasks for robotic arms. It combines object detection with motion planning so that items can be picked efficiently. The main goal of object detection here is to segment different types of items accurately, which is typically done with 2D-based approaches. However, 2D models are inherently limited in scenarios where depth information is needed to differentiate objects with similar colors and shapes, which has prompted interest in 3D deep learning frameworks and their industrial applications.

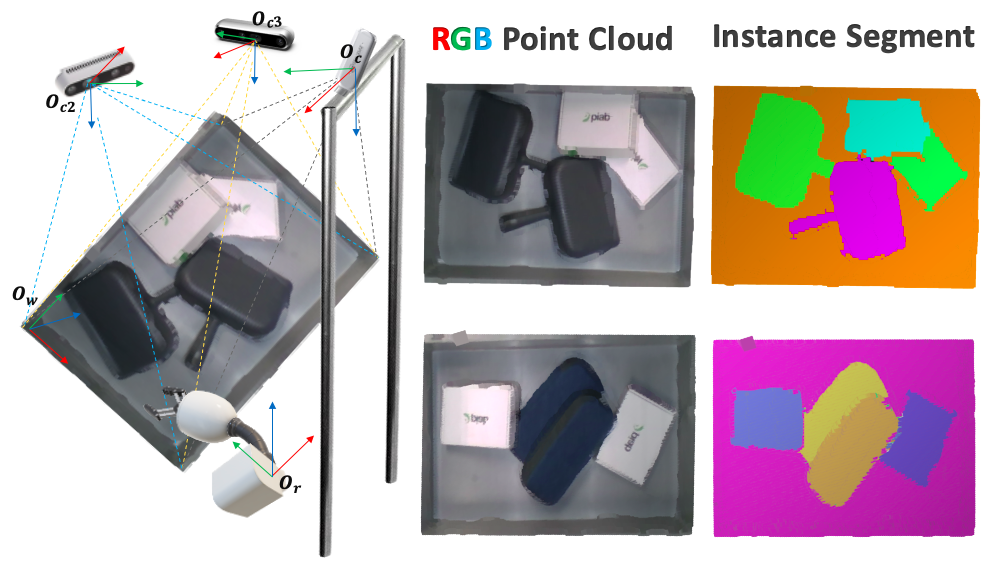

Pick-and-place Robot

Overview

During my internship, I explored state-of-the-art deep learning (DL) frameworks. After comparing candidates on criteria such as model architecture, strengths and weaknesses, and ease of deployment, I integrated the Mask3D model into production use. The implementation involved testing the model on benchmark datasets, establishing a local training pipeline, and deploying the model on the NVIDIA Jetson platform to accelerate inference. The final results were highly promising and show the potential of incorporating 3D DL models into warehouse automation for industry-specific applications.

Summary

- Explore cutting-edge DL networks for 3D semantic instance segmentation to provide alternatives to the current model, and set up a high-performance local training pipeline

- Devise a 2D image-based approach for labeling 3D point cloud data and generating data in batches

- Configure and build a framework on NVIDIA Jetson for testing and optimizing the model inference process

- Organize and document the code while keeping records of each testing and experimental step
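The summary does not describe how the 2D image-based labeling works, so the following is only a minimal sketch of one common way such label transfer can be done, not the method used in this project. It assumes the point cloud is expressed in the camera frame (z pointing forward), that a per-pixel instance mask has already been annotated on the matching 2D image, and that the camera follows a simple pinhole model. The function name `label_points_from_mask` and the parameters `fx`, `fy`, `cx`, `cy`, and `unlabeled` are hypothetical names chosen for illustration.

```python
def label_points_from_mask(points, mask, fx, fy, cx, cy, unlabeled=-1):
    """Give each 3D point the instance id of the pixel it projects onto.

    Assumptions (illustrative, not taken from the project):
      points: iterable of (x, y, z) in the camera frame, z pointing forward
      mask:   2D list of integer instance ids, indexed as mask[row][col]
      fx, fy, cx, cy: pinhole camera intrinsics in pixels
    """
    height, width = len(mask), len(mask[0])
    labels = []
    for x, y, z in points:
        # Points at or behind the camera plane cannot be projected.
        if z <= 0:
            labels.append(unlabeled)
            continue
        # Pinhole projection onto the image plane, rounded to a pixel index.
        u = round(fx * x / z + cx)  # column
        v = round(fy * y / z + cy)  # row
        if 0 <= u < width and 0 <= v < height:
            labels.append(mask[v][u])
        else:
            # The point falls outside the annotated image.
            labels.append(unlabeled)
    return labels


# Toy example: a 3x4 mask with a single pixel belonging to instance 7.
mask = [
    [0, 0, 0, 0],
    [0, 0, 7, 0],
    [0, 0, 0, 0],
]
points = [
    (2.0, 1.0, 1.0),   # projects to column 2, row 1
    (0.0, 0.0, 1.0),   # projects to column 0, row 0
    (0.0, 0.0, -1.0),  # behind the camera
    (10.0, 0.0, 1.0),  # outside the image
]
labels = label_points_from_mask(points, mask, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
```

Annotating in 2D and projecting labels onto the points lets existing image labeling tools be reused, and the same function can be applied frame by frame to generate labeled point clouds in batches. A real pipeline would also need to handle occlusion and lens distortion, which this sketch ignores.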

Result

Integrated 3D Object Segmentation Pipeline for Pick-and-Place