Using Reinforcement Learning to Provide More Robust Congestion Control under Virtual Network Environment

Background

Congestion control, a vital link between high-level internet applications and low-level physical network adapters, has been an extensively researched subject for many years. Traditional approaches such as TCP Cubic and Reno have proven successful in specific industries, aiming to maintain system stability and optimize data transmission efficiency within computer networks. However, these model-based methods rely heavily on predefined parameters, which limits their flexibility in modern dynamic networking environments compared with data-driven schemes.

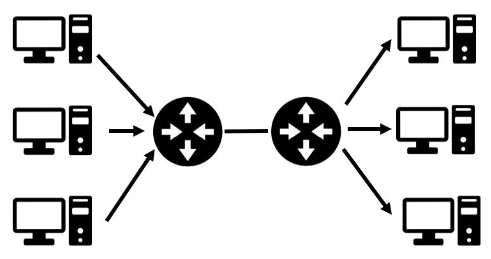

Overview

In this project, I implemented a framework that uses a Reinforcement Learning (RL) algorithm to address the congestion control problem. The framework relies on the Mininet emulator to generate data and feed it to the RL model. To narrow down the state space, I also experimented with Deep Reinforcement Learning (DRL), which restricts the congestion policy. The testbed uses a distributed architecture to increase training efficiency and make full use of the available computing resources. In the experiment, a dumbbell topology network was tested, and the results indicate that the RL-based approach outperforms the classical TCP Cubic model, which is promising.
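To make the RL formulation concrete, here is a minimal sketch of tabular Q-learning on a toy bottleneck queue. It is not the framework's actual code: the environment, the constants (`CAPACITY`, `BUFFER_SIZE`, `ACTIONS`, `MAX_RATE`), the reward weights, and the function names are all assumptions chosen for illustration, and the real project collects its data from Mininet rather than from a simulated queue.

```python
import random

# Hypothetical toy environment: one bottleneck link with a finite router buffer.
CAPACITY = 10         # packets the link drains per step (assumed)
BUFFER_SIZE = 20      # router buffer size in packets (assumed)
ACTIONS = (-2, 0, 2)  # change applied to the sending rate each step (assumed)
MAX_RATE = 20         # upper bound on the sending rate (assumed)


def step(rate, queue, action):
    """Apply one action and return (new_rate, new_queue, reward)."""
    rate = max(1, min(MAX_RATE, rate + action))
    backlog = queue + rate
    delivered = min(backlog, CAPACITY)
    queue = backlog - delivered
    dropped = max(0, queue - BUFFER_SIZE)
    queue = min(queue, BUFFER_SIZE)
    # Reward throughput, penalise queueing and loss (weights are illustrative).
    reward = delivered - 0.5 * queue - 2.0 * dropped
    return rate, queue, reward


def discretise(rate, queue):
    """Bucket the queue length so the Q-table stays small."""
    return rate, queue // 5


def train(episodes=300, steps=50, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Run epsilon-greedy Q-learning and return the learned Q-table."""
    rng = random.Random(seed)
    q = {}
    for _ in range(episodes):
        rate, queue = rng.randint(1, MAX_RATE), 0
        for _ in range(steps):
            s = discretise(rate, queue)
            q.setdefault(s, [0.0] * len(ACTIONS))
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[s][i])  # exploit
            rate, queue, r = step(rate, queue, ACTIONS[a])
            s2 = discretise(rate, queue)
            q.setdefault(s2, [0.0] * len(ACTIONS))
            # Standard Q-learning update toward the bootstrapped target.
            q[s][a] += alpha * (r + gamma * max(q[s2]) - q[s][a])
    return q
```

Calling `train()` returns a dictionary that maps each discretised `(rate, queue_bucket)` state to one Q-value per action. A table like this grows quickly with finer state resolution, which is one reason to replace it with a function approximator.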

Summary

- Design and implement a distributed RL-based framework to train a data-driven model for network congestion control

- Provide and extend the interfaces in the Mininet virtual environment for testing different topologies

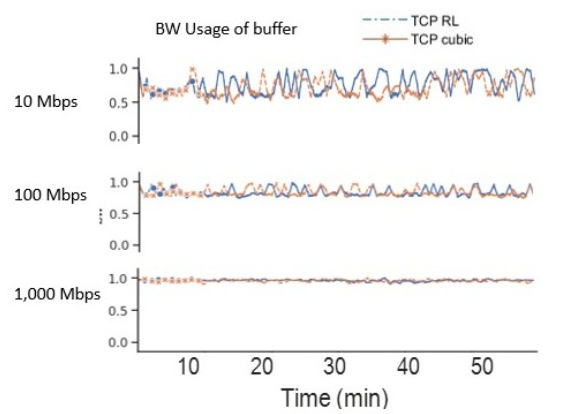

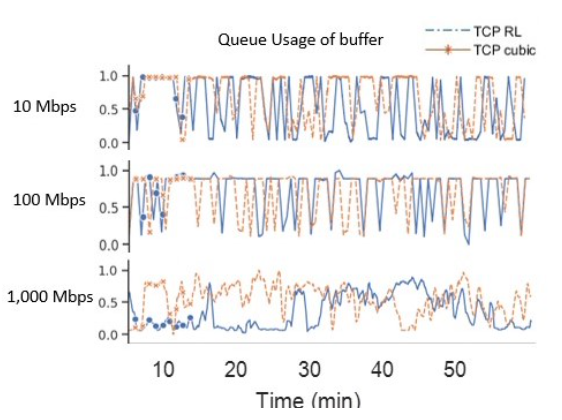

- Use bandwidth and router buffer usage as the network performance metrics

- Deploy a dumbbell topology and compare the performance of the RL scheme with TCP Cubic
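The dumbbell topology and the two metrics from the list above can be sketched in plain Python as follows. This is an illustrative assumption, not the project's code: the node names, default bandwidths, the `penalty` weight, and the way the two metrics are combined into a single reward are all hypothetical. In a Mininet script, each link tuple could plausibly be passed to `Topo.addLink` with a `TCLink` `bw` argument.

```python
def dumbbell_links(n_pairs, access_bw=100, bottleneck_bw=10):
    """Return (node_a, node_b, bandwidth_mbps) links for a dumbbell topology.

    n_pairs senders attach to switch s1, n_pairs receivers attach to switch s2,
    and s1-s2 is the shared bottleneck link. All names are hypothetical.
    """
    links = [("s1", "s2", bottleneck_bw)]
    for i in range(1, n_pairs + 1):
        links.append((f"snd{i}", "s1", access_bw))
        links.append((f"rcv{i}", "s2", access_bw))
    return links


def reward(throughput_mbps, bottleneck_bw, queue_pkts, buffer_pkts, penalty=1.0):
    """Combine bandwidth utilisation and router buffer usage into one score.

    Both terms are clipped to [0, 1]; the linear combination is an assumption.
    """
    utilisation = min(throughput_mbps / bottleneck_bw, 1.0)
    buffer_usage = min(queue_pkts / buffer_pkts, 1.0)
    return utilisation - penalty * buffer_usage


if __name__ == "__main__":
    for link in dumbbell_links(2):
        print(link)
    print(reward(throughput_mbps=10, bottleneck_bw=10, queue_pkts=0, buffer_pkts=100))
```

With this scoring, a flow that saturates the bottleneck while keeping the router buffer empty gets the highest score, and a full buffer cancels out the benefit of full utilisation when `penalty` is 1.0.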

Result

Dumbbell Topology